The first integrated data-taking and analysis in a High Energy Physics experiment.

The procedure of data-taking and analysis at hadron colliders is performed in two steps. In the first step, which physicists call “online”, the data are recorded by the detector, read out by fast electronics and computers, and a selected fraction of events is finally stored on disks and magnetic tapes. The stored events are analysed later, in the so-called “offline analysis”. An important part of the offline analysis is the determination of parameters that depend on the data-taking period, for example alignment (determining the geometrical positions of the different sub-detectors relative to each other) and calibration (precisely determining the relationship between the detector response and the physical quantity being measured). The whole data sample then needs to be “reprocessed” by the computers with this new set of parameters. The whole process takes a long time and uses a large amount of human and computing resources.
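To make the calibration step and the subsequent reprocessing more concrete, here is a toy Python sketch. It assumes, purely for illustration, a linear detector response (response = gain × true value + pedestal); the function names and numbers are hypothetical, and this is not the calibration method used by LHCb or any other experiment. The constants are fitted by least squares from reference measurements with known true values and then applied to every stored raw value, which is what “reprocessing” amounts to in this toy.

```python
"""Toy illustration of calibration followed by reprocessing.

Assumption (hypothetical, not an experiment's actual method): the detector
response is linear in the true quantity, response = gain * true + pedestal.
"""
from typing import List, Tuple


def fit_linear_calibration(true_values: List[float],
                           responses: List[float]) -> Tuple[float, float]:
    """Least-squares fit of response = gain * true + pedestal; returns (gain, pedestal)."""
    n = len(true_values)
    mean_x = sum(true_values) / n
    mean_y = sum(responses) / n
    sxx = sum((x - mean_x) ** 2 for x in true_values)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(true_values, responses))
    gain = sxy / sxx
    pedestal = mean_y - gain * mean_x
    return gain, pedestal


def reprocess(raw_responses: List[float], gain: float, pedestal: float) -> List[float]:
    """Apply the fitted constants to every stored raw response (invert the linear model)."""
    return [(r - pedestal) / gain for r in raw_responses]


# Hypothetical reference measurements with known true values.
known_true = [1.0, 2.0, 3.0, 4.0]
measured = [12.0, 14.0, 16.0, 18.0]  # exactly 2 * true + 10

gain, pedestal = fit_linear_calibration(known_true, measured)
print(gain, pedestal)                           # 2.0 10.0
print(reprocess([20.0, 30.0], gain, pedestal))  # [5.0, 10.0]
```

The point of the toy is the ordering: the constants can only be fitted after data have been collected, and every previously stored value must then be passed through them again.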

In order to speed up and simplify this procedure, the LHCb collaboration has made a revolutionary improvement to the data-taking and analysis process: calibration and alignment now take place automatically online, so the stored data are immediately available offline for physics analysis. The new procedure allowed LHCb to present the first LHC Run 2 physics results at the EPS-HEP conference just a week after the data-taking period ended. It is described in more detail below.

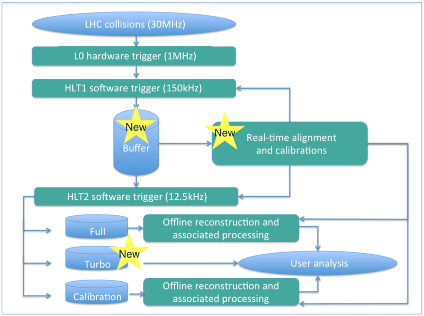

Current technology does not allow all LHC proton-proton collision data to be stored and analysed. An event selection procedure tailored to the scientific goals of each experiment is therefore necessary. This selection can be made by fast electronics (a “hardware trigger” in physicists' language) and/or by computers (a “software trigger”). At LHCb the hardware trigger reduces the 30 million per second (30 MHz) LHC proton-proton collision rate visible to the LHCb detector to 1 MHz, using the characteristic properties of beauty and charm particle production and decays. The data from the whole detector are then read out and transferred at the 1 MHz rate to around 300 electronic cards, such as the one shown in the left image. Fast calculations performed in these cards greatly reduce the data volume by removing content that carries no information about the current event. The data from all these cards are then transferred to a predefined computer in the LHCb farm, shown in the right image, situated 100 m underground near the detector. The network between the cards and the computer farm is so fast that it could carry the entire mobile phone traffic of a country such as Switzerland. The farm contains about 1800 computer boxes with a total of about 27 000 physical processors, nearly doubling the processing power compared with the LHC Run 1 period.
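The figures in this paragraph lend themselves to a quick back-of-the-envelope check. The sketch below uses the approximate numbers quoted above and assumes, for simplicity, that the whole farm processes the 1 MHz hardware-trigger output, that the load is spread evenly, and that each processor handles one event at a time. In practice the farm also runs HLT2 and the alignment and calibration tasks, so the results are rough indications, not LHCb specifications; the function names are hypothetical.

```python
# Approximate figures quoted in the text.
VISIBLE_RATE_HZ = 30e6          # collision rate seen by LHCb
HARDWARE_OUTPUT_RATE_HZ = 1e6   # rate after the hardware trigger
FARM_BOXES = 1800               # computer boxes in the farm
FARM_PROCESSORS = 27_000        # physical processors in the farm


def reduction_factor(input_rate_hz: float, output_rate_hz: float) -> float:
    """How many input events arrive for every event that is kept."""
    return input_rate_hz / output_rate_hz


def time_budget_ms(event_rate_hz: float, n_workers: int) -> float:
    """Average time each worker may spend per event, in milliseconds.

    Simplifying assumption: the load is spread evenly and each worker
    processes exactly one event at a time.
    """
    return n_workers * 1e3 / event_rate_hz


factor = reduction_factor(VISIBLE_RATE_HZ, HARDWARE_OUTPUT_RATE_HZ)
per_box_rate = HARDWARE_OUTPUT_RATE_HZ / FARM_BOXES
budget = time_budget_ms(HARDWARE_OUTPUT_RATE_HZ, FARM_PROCESSORS)

print(f"hardware trigger keeps 1 event in {factor:.0f}")  # ... 1 event in 30
print(f"about {per_box_rate:.0f} events/s per box")        # about 556 events/s per box
print(f"about {budget:.0f} ms per event per processor")    # about 27 ms per event ...
```

Under these assumptions each processor has of the order of a few tens of milliseconds per event at the 1 MHz input rate.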

The software trigger programs, also called the “High Level Trigger” (HLT), run in the computer farm. In the first stage, HLT1, less interesting events are removed and the data flow rate is reduced to 150 kHz. The selected events are stored in a 5 PB disk “Buffer” (see image). An automatic procedure then aligns around 1700 detector components and calculates about 2000 calibration constants, all within a few minutes. These alignment and calibration parameters are then used in the second stage of the software trigger, HLT2, which processes the data stored in the Buffer with the same quality as the offline analysis would. This additional selection reduces the data flow rate to 12.5 kHz, and the resulting data sample is stored for future analysis. The output data are split into two samples. The larger “full” data sample allows the whole offline reconstruction and associated processing to be redone as required. The “turbo” data sample keeps only the information needed for physics analysis, at the offline quality already achieved during HLT2 processing. This “turbo” sample was used to obtain the results presented at the conference just a week after data collection ended, as mentioned above.
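The sequence described above can be sketched as a toy Python model. The rates are the approximate values quoted in the text; everything else (the `Event` fields, the cut values, a single-offset “alignment”, and writing each selected event to both outputs) is hypothetical and only meant to show the ordering: HLT1 fills the buffer, constants are derived from the buffered data, and HLT2 uses them before producing a compact “turbo” record and a complete “full” record. How LHCb actually routes events between the two samples is not described here, and this is not LHCb's trigger software.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple

# Approximate rates quoted in the text, in Hz, in processing order.
RATES_HZ = [
    ("visible collisions", 30e6),
    ("after hardware trigger", 1e6),
    ("after HLT1", 150e3),
    ("after HLT2", 12.5e3),
]


def rejection_factors(rates: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Input rate divided by output rate for each consecutive stage."""
    return [(name, prev_rate / rate)
            for (_, prev_rate), (name, rate) in zip(rates, rates[1:])]


@dataclass
class Event:
    raw_position: float  # hypothetical uncalibrated measurement
    score: float         # hypothetical quantity the toy selections cut on


def hlt1(events: List[Event], cut: float) -> List[Event]:
    """Fast, loose first-stage selection; survivors are written to the buffer."""
    return [e for e in events if e.score > cut]


def align(buffer: List[Event], nominal_position: float) -> float:
    """Toy 'alignment': one detector offset estimated from the buffered events."""
    return mean(e.raw_position for e in buffer) - nominal_position


def hlt2(buffer: List[Event], offset: float,
         cut: float) -> Tuple[List[float], List[Event]]:
    """Tighter second-stage selection that uses the fresh alignment constant.

    Returns (turbo, full): turbo keeps only the corrected quantity needed for
    analysis; full keeps the whole raw event so it can be reprocessed later.
    """
    selected = [e for e in buffer if e.score > cut]
    turbo = [e.raw_position - offset for e in selected]
    full = list(selected)
    return turbo, full


for name, factor in rejection_factors(RATES_HZ):
    print(f"{name}: rejection factor {factor:.1f}")  # 30.0, 6.7, 12.0
print(f"overall: {RATES_HZ[0][1] / RATES_HZ[-1][1]:.0f}")  # overall: 2400

# Toy data: true position 5.0, detector shifted by 0.25 (hypothetical numbers).
events = [Event(raw_position=5.25, score=i / 10) for i in range(10)]
buffer = hlt1(events, cut=0.35)                # keeps scores 0.4 .. 0.9
offset = align(buffer, nominal_position=5.0)   # 0.25
turbo, full = hlt2(buffer, offset, cut=0.75)   # keeps scores 0.8 and 0.9
print(offset, turbo, len(full))                # 0.25 [5.0, 5.0] 2
```

With these rates, only about one visible collision in 2400 ends up in the stored output. The design point the toy mirrors is that alignment runs on buffered data between the two stages, so HLT2 always works with constants derived from the current data.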

This new approach of making offline-quality information available to the trigger, and performing physics analysis directly on the trigger output data, represents a paradigm shift in data processing for particle physics experiments, and will have significant consequences for the future physics programme of LHCb.

Read more in the CERN Courier article and in the LHCb seminar at CERN.